
Variational Autoencoder: Intuition and Implementation

There are two generative models going neck and neck in the data generation business right now: Generative Adversarial Nets (GAN) and Variational Autoencoder (VAE). The two take different approaches to training. GAN is rooted in game theory: its objective is to find the Nash equilibrium between the discriminator net and the generator net. VAE, on the other hand, is rooted in Bayesian inference: it wants to model the underlying probability distribution of the data so that it can sample new data from that distribution.

In this post, we will look at the intuition behind the VAE model and its implementation in Keras.

VAE: Formulation and Intuition

Suppose we want to generate some data. A good way to do it is to first decide what kind of data we want to generate, and then actually generate it. For example, say we want to generate an animal. First, we imagine the animal: it must have four legs, and it must be able to swim. With those criteria in hand, we can then actually generate the animal by sampling from the animal kingdom. Lo and behold, we get a platypus!

From the story above, our imagination is analogous to a latent variable. It is often useful to decide the latent variable first in generative models, as the latent variable can describe our data. Without a latent variable, it is as if we just generate data blindly. And this is a difference between GAN and VAE: VAE explicitly models the latent variable and learns to infer it from data, hence it's an expressive model.

Alright, that fable is great and all, but how do we model that? Well, let’s talk about probability distributions.

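Let’s define some notation:

- $X$: the data we want to model, a.k.a. the animal
- $z$: the latent variable, a.k.a. our imagination
- $P(X)$: the probability distribution of the data, i.e. the animal kingdom
- $P(z)$: the probability distribution of the latent variable, i.e. the source of our imagination
- $P(X \mid z)$: the distribution of the data given the latent variable, i.e. turning imagination into a real animal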

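Our objective here is to model the data, hence we want to find $P(X)$. Using the law of total probability, we can express it in terms of $z$:

$$P(X) = \int P(X \mid z) \, P(z) \, dz$$

that is, we marginalize out $z$ from the joint probability distribution $P(X, z)$.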
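To make the marginalization concrete, here is a minimal NumPy sketch that estimates $P(X)$ by averaging $P(X \mid z)$ over samples of $z$. The toy model is an assumption chosen only because its marginal is known exactly: $z \sim N(0, 1)$ and $X \mid z \sim N(z, 0.5^2)$, so $X \sim N(0, 1 + 0.5^2)$. The function names are illustrative and not part of the post's code.

```python
import numpy as np


def normal_pdf(x, mean, std):
    """Density of a 1-D Gaussian N(mean, std^2) evaluated at x."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))


def marginal_mc(x, noise_std=0.5, n_samples=200_000, seed=0):
    """Estimate P(X=x) = integral of P(x|z) P(z) dz by averaging over z ~ P(z)."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(n_samples)                   # z ~ P(z) = N(0, 1)
    return normal_pdf(x, mean=z, std=noise_std).mean()   # average of P(x|z)


def marginal_exact(x, noise_std=0.5):
    """For this toy model the marginal is N(0, 1 + noise_std^2)."""
    return normal_pdf(x, mean=0.0, std=np.sqrt(1.0 + noise_std ** 2))


estimate = marginal_mc(1.0)
exact = marginal_exact(1.0)
print(estimate, exact)
```

In one dimension this brute-force average works well. For images with a high-dimensional $z$, almost every sampled $z$ gives a negligible $P(X \mid z)$, which is one reason to look for latent values that are likely given the data.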

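Now if only we knew $P(X \mid z)$ and $P(z)$, the two ingredients of that integral.

The idea of VAE is to infer $P(z)$ using $P(z \mid X)$. This makes sense, as we want our latent variable to be likely under our data.

But the problem is, we have to infer that distribution $P(z \mid X)$, as we do not know it yet. VAE, as its name suggests, does this with variational inference: approximate $P(z \mid X)$ with a simpler distribution $Q(z \mid X)$, for example a Gaussian, and minimize the KL divergence between the two.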

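Alright, now let’s say we want to infer $P(z \mid X)$ using $Q(z \mid X)$. The KL divergence between the two is:

$$D_{KL}[Q(z \mid X) \Vert P(z \mid X)] = E_{z \sim Q}[\log Q(z \mid X) - \log P(z \mid X)]$$

Recall the notation above: there are two things that we haven’t used yet, namely $P(X \mid z)$ and $P(z)$. Bayes’ rule, $P(z \mid X) = P(X \mid z) P(z) / P(X)$, brings them into the equation:

$$D_{KL}[Q(z \mid X) \Vert P(z \mid X)] = E_{z \sim Q}[\log Q(z \mid X) - \log P(X \mid z) - \log P(z) + \log P(X)]$$

Notice that the expectation is over $z$ and $P(X)$ does not depend on $z$, so we can move it out of the expectation:

$$D_{KL}[Q(z \mid X) \Vert P(z \mid X)] - \log P(X) = E_{z \sim Q}[\log Q(z \mid X) - \log P(X \mid z) - \log P(z)]$$

If we look carefully at the right hand side of the equation, we notice that it can be rewritten as another KL divergence. So let’s do that by first flipping the sign:

$$\log P(X) - D_{KL}[Q(z \mid X) \Vert P(z \mid X)] = E_{z \sim Q}[\log P(X \mid z)] - E_{z \sim Q}[\log Q(z \mid X) - \log P(z)]$$

And this is it, the VAE objective function:

$$\log P(X) - D_{KL}[Q(z \mid X) \Vert P(z \mid X)] = E_{z \sim Q}[\log P(X \mid z)] - D_{KL}[Q(z \mid X) \Vert P(z)]$$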

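At this point, what do we have? Let’s enumerate:

- $Q(z \mid X)$, which projects our data $X$ into the latent variable space
- $z$, the latent variable
- $P(X \mid z)$, which generates data given the latent variable

We might feel familiar with this kind of structure. And guess what, it’s the same structure as seen in an autoencoder! That is, $Q(z \mid X)$ is the encoder net, $z$ is the encoded representation, and $P(X \mid z)$ is the decoder net.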
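As a sanity check on the objective, the identity $\log P(X) - D_{KL}[Q(z \mid X) \Vert P(z \mid X)] = E_{z \sim Q}[\log P(X \mid z)] - D_{KL}[Q(z \mid X) \Vert P(z)]$ can be verified numerically on a tiny discrete model where every term is a finite sum. The three-state prior, likelihood, and $Q$ below are made-up numbers for illustration only.

```python
import numpy as np


def kl(q, p):
    """KL divergence D_KL[q || p] between two discrete distributions."""
    return float(np.sum(q * (np.log(q) - np.log(p))))


# Toy model with three latent states and one fixed observation X.
prior = np.array([0.5, 0.3, 0.2])          # P(z)
likelihood = np.array([0.10, 0.60, 0.30])  # P(X | z) for the observed X
q = np.array([0.2, 0.5, 0.3])              # Q(z | X), any valid distribution

evidence = float(np.sum(likelihood * prior))  # P(X) = sum_z P(X|z) P(z)
posterior = likelihood * prior / evidence     # P(z | X) by Bayes' rule

lhs = np.log(evidence) - kl(q, posterior)
rhs = float(np.sum(q * np.log(likelihood))) - kl(q, prior)
print(lhs, rhs)
```

The two sides agree up to floating-point error for any choice of `q`, and both stay below `np.log(evidence)` because a KL divergence is never negative.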

VAE: Dissecting the Objective

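It turns out the VAE objective function has a very nice interpretation. We want to model our data, which is described by $\log P(X)$, under some error $D_{KL}[Q(z \mid X) \Vert P(z \mid X)]$. Since a KL divergence is never negative, the objective is a lower bound on $\log P(X)$.

That model can then be found by maximizing over some mapping from latent variable to data, $E[\log P(X \mid z)]$, while minimizing the difference between our simple distribution $Q(z \mid X)$ and the latent variable distribution $P(z)$.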

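As we might already know, maximizing $E[\log P(X \mid z)]$ is maximum likelihood estimation, which we see all the time in discriminative supervised models such as logistic regression or linear regression. In other words, given an input $z$ and an output $X$, we want to maximize the conditional distribution $P(X \mid z)$ under some model parameters. So we can implement it with any model that takes $z$ as input and outputs $X$, trained with, for example, a log loss or a regression loss.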
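A small NumPy sketch of why the reconstruction term ends up as a cross-entropy in the Keras code later in this post: if each pixel is modeled as an independent Bernoulli variable whose mean is the decoder's sigmoid output, then $\log P(X \mid z)$ is exactly the negative binary cross-entropy summed over pixels. The four-pixel "image" and the function names are illustrative assumptions.

```python
import numpy as np


def bernoulli_log_likelihood(x, p, eps=1e-7):
    """log P(x | z) for pixels x under independent Bernoulli means p."""
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    return np.sum(x * np.log(p) + (1.0 - x) * np.log(1.0 - p), axis=-1)


def binary_cross_entropy(x, p, eps=1e-7):
    """Summed binary cross-entropy between target x and prediction p."""
    return -bernoulli_log_likelihood(x, p, eps)


x = np.array([1.0, 0.0, 1.0, 1.0])            # a tiny 4-pixel "image"
good = np.array([0.9, 0.1, 0.8, 0.9])         # decoder output close to x
uninformed = np.array([0.5, 0.5, 0.5, 0.5])   # decoder output that ignores x

print(bernoulli_log_likelihood(x, good), bernoulli_log_likelihood(x, uninformed))
```

Maximizing the log-likelihood and minimizing the cross-entropy are therefore the same thing; the better reconstruction gets the higher log-likelihood.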

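What about $D_{KL}[Q(z \mid X) \Vert P(z)]$? Here, $P(z)$ is the latent variable distribution. We will want to sample from $P(z)$ later, so the easiest choice is $N(0, 1)$. Hence, we want to make $Q(z \mid X)$ as close as possible to $N(0, 1)$.

Having $P(z) = N(0, 1)$ also brings another benefit. Say we also want $Q(z \mid X)$ to be Gaussian with parameters $\mu(X)$ and $\Sigma(X)$, i.e. the mean and covariance given $X$. Then the KL divergence between the two distributions can be computed in closed form:

$$D_{KL}[N(\mu(X), \Sigma(X)) \Vert N(0, 1)] = \frac{1}{2} \left( \mathrm{tr}(\Sigma(X)) + \mu(X)^T \mu(X) - k - \log \det(\Sigma(X)) \right)$$

Above, $k$ is the dimension of the Gaussian and $\mathrm{tr}$ is the trace, i.e. the sum of the diagonal of a matrix. The determinant of a diagonal matrix is the product of its diagonal, so if $\Sigma(X)$ is diagonal we can implement it as just a vector:

$$D_{KL}[N(\mu(X), \Sigma(X)) \Vert N(0, 1)] = \frac{1}{2} \sum_{i=1}^{k} \left( \Sigma_i(X) + \mu_i^2(X) - 1 - \log \Sigma_i(X) \right)$$

In practice, however, it’s better to model $\log \Sigma(X)$ rather than $\Sigma(X)$ itself, as taking an exponent is more numerically stable than computing a log. Writing $s(X) = \log \Sigma(X)$ for the network output, our final KL divergence term is:

$$D_{KL}[N(\mu(X), \Sigma(X)) \Vert N(0, 1)] = \frac{1}{2} \sum_{i=1}^{k} \left( \exp(s_i(X)) + \mu_i^2(X) - 1 - s_i(X) \right)$$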
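The closed-form KL term used in the Keras loss later in this post, `0.5 * sum(exp(log_sigma) + mu^2 - 1 - log_sigma)`, can be checked against a sampling estimate. Below is a minimal NumPy sketch, assuming a diagonal Gaussian parameterized by its mean and log-variance; the function names and test values are illustrative.

```python
import numpy as np


def kl_to_standard_normal(mu, log_var):
    """Closed-form D_KL[N(mu, diag(exp(log_var))) || N(0, I)] over the last axis."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var, axis=-1)


def kl_monte_carlo(mu, log_var, n_samples=200_000, seed=0):
    """Estimate the same KL as E_{z~Q}[log Q(z) - log P(z)] by sampling from Q."""
    rng = np.random.default_rng(seed)
    std = np.exp(0.5 * log_var)
    z = mu + std * rng.standard_normal((n_samples, mu.shape[-1]))
    log_q = -0.5 * np.sum(((z - mu) / std) ** 2 + log_var + np.log(2 * np.pi), axis=-1)
    log_p = -0.5 * np.sum(z ** 2 + np.log(2 * np.pi), axis=-1)
    return float(np.mean(log_q - log_p))


mu = np.array([0.5, -1.0])
log_var = np.array([0.2, -0.7])
print(kl_to_standard_normal(mu, log_var), kl_monte_carlo(mu, log_var))
```

The two numbers should agree closely, and the closed form is exactly zero when `mu` and `log_var` are both zero, i.e. when $Q(z \mid X)$ already equals the prior.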

Implementation in Keras

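First, let’s implement the encoder net $Q(z \mid X)$, which takes the input $X$ and outputs two things: $\mu(X)$ and $\log \Sigma(X)$, the parameters of the Gaussian.

```python
from tensorflow.examples.tutorials.mnist import input_data
from keras.layers import Input, Dense, Lambda
from keras.models import Model
from keras.objectives import binary_crossentropy
from keras.callbacks import LearningRateScheduler

import numpy as np
import matplotlib.pyplot as plt
import keras.backend as K
import tensorflow as tf

m = 50        # minibatch size
n_z = 2       # dimension of the latent variable z
n_epoch = 10  # number of training epochs

# Q(z|X) -- encoder
inputs = Input(shape=(784,))
h_q = Dense(512, activation='relu')(inputs)
mu = Dense(n_z, activation='linear')(h_q)         # mean of Q(z|X)
log_sigma = Dense(n_z, activation='linear')(h_q)  # log-variance of Q(z|X)
```

That is, our $Q(z \mid X)$ is a neural net with one hidden layer. The latent variable here is two-dimensional (`n_z = 2`), which makes it easy to visualize later.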

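However, we are now facing a problem. How do we get $z$ from the encoder’s outputs? We could sample $z$ from the Gaussian that the encoder’s outputs parameterize, but a sampling operation has no gradient, so the network could not be trained with gradient descent.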

There is, however, a trick called the reparameterization trick, which makes the network differentiable. The reparameterization trick basically diverts the non-differentiable operation out of the network, so that, even though we still involve something non-differentiable, it sits outside the network and the network can still be trained.

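The reparameterization trick is as follows. Recall that if we have $x \sim N(\mu, \Sigma)$ and we standardize it so that $\mu = 0$ and $\Sigma = 1$, we can get back the original distribution by reverting the standardization:

$$x = \mu + \Sigma^{1/2} x_{std}$$

With that in mind, we could extend it. If we sample from a standard normal distribution, we can convert the sample to any Gaussian we want if we know the mean and the variance. Hence we can implement our sampling operation of $z$ by:

$$z = \mu(X) + \Sigma^{1/2}(X) \, \epsilon$$

where $\epsilon \sim N(0, 1)$.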

Now, during backpropagation, we no longer care about the sampling process, as it is now outside of the network: the sampled noise doesn’t depend on anything in the net, hence the gradient won’t flow through it. The gradient flows through the mean and the variance instead.
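The trick can be illustrated outside of Keras with a few lines of NumPy. This is a sketch under simple assumptions (a scalar Gaussian with mean 1.5 and standard deviation 0.5, and the test function $f(z) = z^2$); the names are illustrative. Because $z$ is a deterministic function of the mean, the log-variance, and the noise, the derivative of $z$ with respect to the mean is simply 1, and a gradient of an expectation can be estimated by averaging over noise samples.

```python
import numpy as np


def sample_reparameterized(mu, log_var, rng):
    """Draw z ~ N(mu, exp(log_var)) as a deterministic function of noise eps."""
    eps = rng.standard_normal(np.shape(mu))  # the randomness lives outside the "network"
    z = mu + np.exp(0.5 * log_var) * eps
    return z, eps


rng = np.random.default_rng(0)
mu = np.full(100_000, 1.5)
log_var = np.full(100_000, np.log(0.25))  # variance 0.25, i.e. std 0.5
z, eps = sample_reparameterized(mu, log_var, rng)

# The samples have the requested mean and standard deviation.
print(z.mean(), z.std())

# Since z = mu + std * eps, dz/dmu = 1. The pathwise gradient of E[z^2]
# with respect to mu is therefore E[2 * z], which is analytically 2 * mu.
grad_mu = np.mean(2.0 * z)
print(grad_mu)
```

Sampling $z$ directly from $N(\mu, \Sigma)$ would give the same samples in distribution, but there would be no expression connecting $z$ to the mean and variance for the gradient to follow.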

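```python
def sample_z(args):
    mu, log_sigma = args
    # eps ~ N(0, 1); the shape is fixed to (m, n_z), so every batch must hold exactly m samples
    eps = K.random_normal(shape=(m, n_z), mean=0., std=1.)
    # log_sigma is the log-variance, so exp(log_sigma / 2) is the standard deviation
    return mu + K.exp(log_sigma / 2) * eps


# Sample z ~ Q(z|X)
z = Lambda(sample_z)([mu, log_sigma])
```

Now we create the decoder net $P(X \mid z)$: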

```python
# P(X|z) -- decoder
decoder_hidden = Dense(512, activation='relu')
decoder_out = Dense(784, activation='sigmoid')

h_p = decoder_hidden(z)
outputs = decoder_out(h_p)
```

Lastly, from this model, we can do three things: reconstruct inputs, encode inputs into latent variables, and generate data from a latent variable. So, we have three Keras models:

```python
# Overall VAE model, for reconstruction and training
vae = Model(inputs, outputs)

# Encoder model, to encode input into latent variable
# We use the mean as the output as it is the center point, the representative of the Gaussian
encoder = Model(inputs, mu)

# Generator model, generate new data given latent variable z
d_in = Input(shape=(n_z,))
d_h = decoder_hidden(d_in)
d_out = decoder_out(d_h)
decoder = Model(d_in, d_out)
```

Then, we need to translate our loss into Keras code:

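```python
def vae_loss(y_true, y_pred):
    """Calculate loss = reconstruction loss + KL loss for each data point in the minibatch."""
    # Reconstruction term: -E[log P(X|z)], summed over the 784 pixels
    # (argument order is (output, target), as in the Keras 1.x backend)
    recon = K.sum(K.binary_crossentropy(y_pred, y_true), axis=1)
    # D_KL(Q(z|X) || P(z)); calculated in closed form as both distributions are Gaussian
    kl = 0.5 * K.sum(K.exp(log_sigma) + K.square(mu) - 1. - log_sigma, axis=1)

    return recon + kl
```

and then train it:

```python
# X_train is assumed to hold the flattened training images: shape (n_samples, 784), values in [0, 1]
vae.compile(optimizer='adam', loss=vae_loss)
vae.fit(X_train, X_train, batch_size=m, nb_epoch=n_epoch)
```

And that’s it, the implementation of VAE in Keras!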

Implementation on MNIST Data

We could use any dataset really, but like always, we will use MNIST as an example.

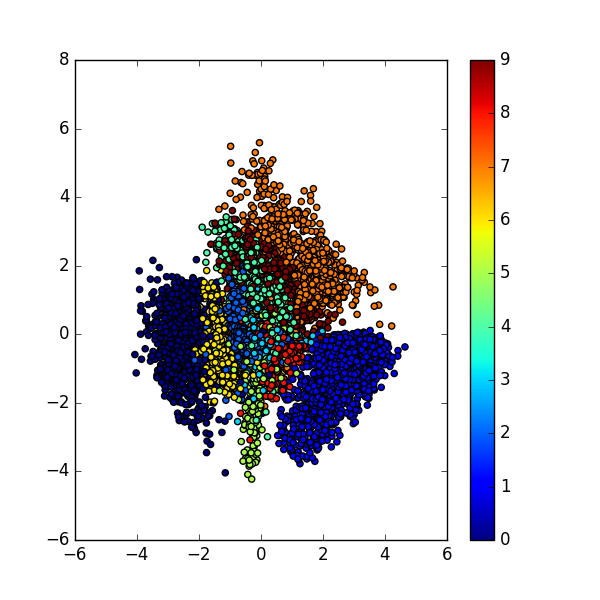

After training our VAE model, we can visualize the latent variable space:

As we can see, in the latent space, the representations of data that share the same characteristic, e.g. the same label, are close to each other. Notice that in the training phase, we never provide any label information about the data.

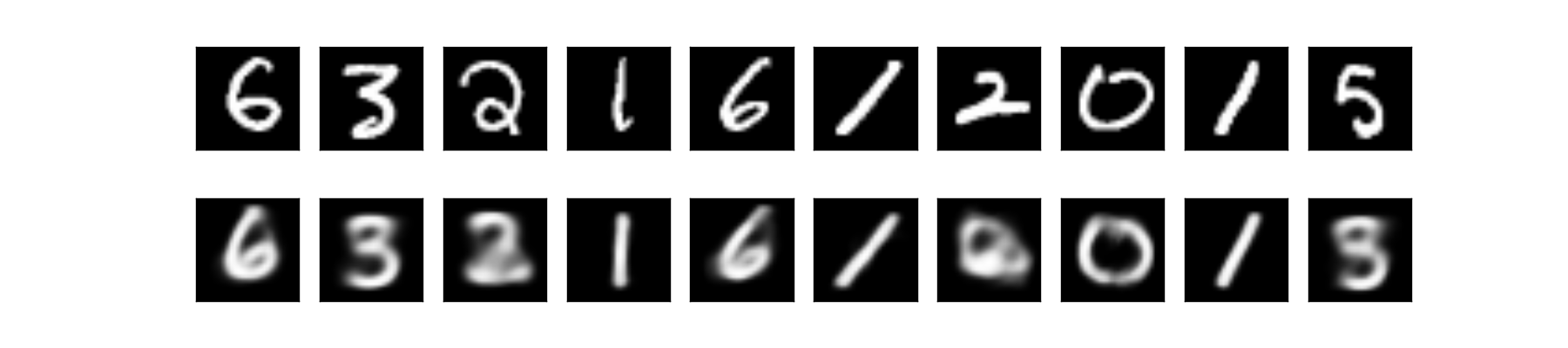

We can also look at the data reconstruction by running the data through the overall VAE net:

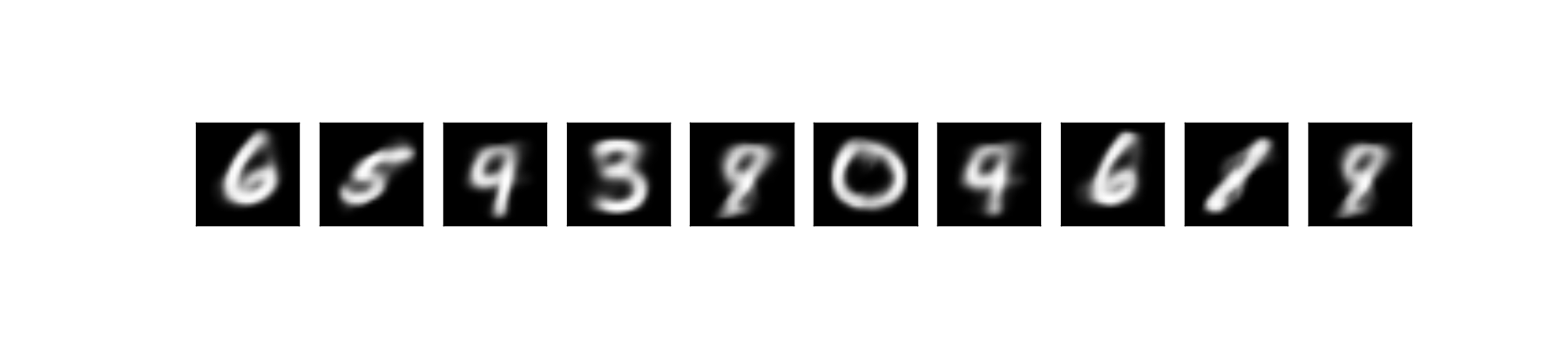

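Lastly, we can generate new samples by first sampling $z \sim N(0, 1)$ and feeding it into our decoder net.

If we look closely at the reconstructed and generated data, we notice that some of the data are ambiguous. For example, the digit 5 looks like 3 or 8. That’s because our latent variable space is a continuous distribution (i.e. $N(0, 1)$), so neighboring clusters of similar-looking digits blend smoothly into one another at their edges.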
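The generation step can be sketched as follows. This NumPy example only shows the mechanics of sampling latent codes from the prior and tiling the decoded images into one canvas; `stand_in_decoder` is a hypothetical placeholder (a random linear map followed by a sigmoid), not a trained model, and the helper names are illustrative.

```python
import numpy as np


def sample_latents(n, n_z=2, seed=0):
    """Draw n latent codes z ~ N(0, I), the prior the decoder is trained against."""
    return np.random.default_rng(seed).standard_normal((n, n_z))


def tile_images(flat_images, rows, cols, side=28):
    """Arrange rows * cols flattened side x side images into one 2-D canvas."""
    imgs = flat_images.reshape(rows, cols, side, side)
    return imgs.transpose(0, 2, 1, 3).reshape(rows * side, cols * side)


def stand_in_decoder(z, out_dim=784, seed=1):
    """Hypothetical placeholder for a trained decoder: random linear map + sigmoid."""
    w = np.random.default_rng(seed).standard_normal((z.shape[1], out_dim))
    return 1.0 / (1.0 + np.exp(-(z @ w)))


z = sample_latents(16)
generated = stand_in_decoder(z)                # shape (16, 784), values in (0, 1)
canvas = tile_images(generated, rows=4, cols=4)
print(canvas.shape)
```

With the models defined earlier in this post, `decoder.predict(z)` would take the place of `stand_in_decoder(z)`, and the resulting canvas could be shown with `plt.imshow(canvas, cmap='gray')`.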

Conclusion

In this post we looked at the intuition behind Variational Autoencoder (VAE), its formulation, and its implementation in Keras.

We also saw the difference between VAE and GAN, the two most popular generative models nowadays.

For more math on VAE, be sure to read the original paper by Kingma and Welling (2013). There is also an excellent tutorial on VAE by Carl Doersch. Check out the references section below.

The full code is available in my repo: https://github.com/wiseodd/generative-models

References

- Doersch, Carl. “Tutorial on variational autoencoders.” arXiv preprint arXiv:1606.05908 (2016).

- Kingma, Diederik P., and Max Welling. “Auto-encoding variational bayes.” arXiv preprint arXiv:1312.6114 (2013).

- https://blog.keras.io/building-autoencoders-in-keras.html